Successful eCommerce with Search Engine Optimization

Anyone who wants to succeed in eCommerce with their own online shop and beat the competition cannot do without search engine optimization. The number of competitors is simply too large, and not only in sectors such as fashion or cosmetics. Even in niche markets the number of competing shops keeps growing, so no shop operator can rely on good value for money alone.

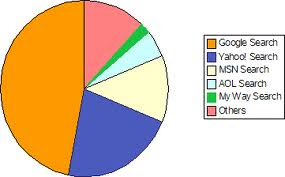

After all, the first priority is to be found on the Internet at all. Most Internet users search for information and products with Google. A shop owner whose shop does not appear in the top 10 results will find it hard to attract customers; websites that are not listed within at least the first three result pages receive practically no visitors from search. The goal, therefore, is to rank as high as possible in the SERPs for relevant keywords.

But what needs to be considered here? Does SEO for online shops differ significantly from SEO for blogs and other websites? Here are a few tips that do not reinvent the proverbial wheel but are often given too little attention.

Content remains king

Good content is very important not only for blogs and similar sites but also for online shops. Basically, the same rules apply as for other websites: the content should be interesting and unique, so you should avoid duplicate content. This problem occurs especially often with product descriptions. Manufacturers frequently supply the same descriptions to all retailers, and anyone who publishes them unchanged is in the company of perhaps hundreds of other shops. Google, of course, does not like this. It is therefore better to make the effort to write your own product descriptions, or at least to rework the manufacturer's text a little.

Detailed and informative product descriptions please not only the search engines but also the customers. After all, customers want to know all the key data of a product before placing an order. Good product descriptions therefore also build trust, which is one more reason to address this aspect early.

For large shops with hundreds of products in their range, giving each product its own description is a challenge not to be underestimated. But the descriptions do not all have to be written at once. If the site is gradually supplemented with the important descriptions, this still brings the desired effect. Another option is to give product pages that do not yet have any text a "noindex" tag for the time being. This can help the other product pages in the Google ranking.
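The "noindex" approach can be sketched in a few lines. The following minimal Python example assumes a hypothetical `Product` record with an optional `description` field and emits a robots meta tag: `noindex` while the page has no text of its own, normal indexing once a description exists. Keeping `follow`, so that crawlers can still reach linked product pages, is a design choice of this sketch rather than something the article prescribes.

```python
from dataclasses import dataclass


@dataclass
class Product:
    # Hypothetical product record; field names are illustrative only.
    sku: str
    name: str
    description: str = ""


def robots_meta(product: Product) -> str:
    """Return the robots meta tag for a product page's <head>."""
    if product.description.strip():
        # The page has its own text, so it may be indexed normally.
        content = "index, follow"
    else:
        # No text yet: keep the page out of the index for now, but let
        # crawlers follow its links to other product pages.
        content = "noindex, follow"
    return f'<meta name="robots" content="{content}">'
```

As soon as a description is written for a product, the same function switches the page back to `index`, which fits the advice to add descriptions gradually.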

Besides product descriptions, there is another way to create content for online shops: customer reviews. If customers can review products directly in the shop, so-called user-generated content is created. It not only costs nothing but also makes the shop more trustworthy to other users, because most Internet users place relatively high value on the opinions of other users. In addition, shops that integrate customer reviews into their product pages usually rank better in Google. This is partly because the pages gain a certain dynamism as new reviews are added, which Google rates positively.

By the way: if you cannot avoid producing duplicate content, for example because products are listed in several categories or are also offered as PDFs, you should take appropriate measures so that Google does not rate this negatively. In the robots.txt file you can specify which areas of the site bots should not crawl. With canonical URLs you show the search engine which page is the "original", so that the other versions are not counted as duplicate content. These measures are simple and effective, but far too often they remain unused.
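Both measures can be illustrated with Python's standard library. The sketch below assumes a hypothetical shop at `shop.example.com` whose PDF versions live under `/downloads/pdf/`; it uses `urllib.robotparser.RobotFileParser` to check which URLs a robots.txt rule blocks, and builds the `<link rel="canonical">` tag that each duplicate variant would carry in its `<head>`. All paths and URLs are placeholders.

```python
from html import escape
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all bots away from the PDF copies.
ROBOTS_TXT = """\
User-agent: *
Disallow: /downloads/pdf/
"""


def is_crawlable(url: str, robots_txt: str = ROBOTS_TXT, agent: str = "Googlebot") -> bool:
    """Check whether `agent` may fetch `url` under the given robots.txt rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


def canonical_link(original_url: str) -> str:
    """Tag for the <head> of every duplicate page, pointing at the original."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'
```

For a product reachable under two category paths, for example `/lamps/desk-lamp-x200` and `/office/desk-lamp-x200`, both pages would carry the same tag returned by `canonical_link("https://shop.example.com/lamps/desk-lamp-x200")`.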

Choosing the right keywords and tags

Another important criterion in search engine optimization for online shops is choosing the right keywords and tags for the various products and categories. Those who use the words that users actually search for have much better chances of being found via search engines. Various tools provide clues to users' search behavior, such as Google's own AdWords keyword tool, which also shows the search volume of different keywords and what the competition looks like. It can also display synonyms and related terms, which may inspire sensible alternatives.

With regard to tags, it is important that the brand name and model number always appear in the title tag (and in the H1 heading). Anyone who offers many products in their shop should nevertheless try to create a unique title tag for each product page. A combination of brand, model and product type is often the best solution.
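The brand-model-type pattern for unique title tags could look like the following minimal Python sketch. The function names, the optional shop-name suffix and the example product "Acme X200" are hypothetical; a second helper flags titles that occur more than once in the catalog, since those pages would not have unique titles.

```python
from collections import Counter
from html import escape
from typing import List, Optional


def product_title(brand: str, model: str, product_type: str,
                  shop_name: Optional[str] = None) -> str:
    """Build a <title> tag from brand, model and product type."""
    parts = [brand.strip(), model.strip(), product_type.strip()]
    title = " ".join(part for part in parts if part)
    if shop_name:
        # Optional suffix; brand and model stay at the front of the title.
        title = f"{title} | {shop_name}"
    return f"<title>{escape(title)}</title>"


def duplicate_titles(titles: List[str]) -> List[str]:
    """Return every title that is used by more than one page, sorted."""
    counts = Counter(titles)
    return sorted(title for title, n in counts.items() if n > 1)
```

The same brand-model-type string can be reused as the page's H1 heading.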

Providing many images usually does customers a big favor. However, you must not forget to name the image files meaningfully and to use alt attributes (often called alt tags), because otherwise Google cannot tell what the images show.
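One way to name images and fill their alt attributes consistently is to derive both from the product data. In this minimal Python sketch, the `/images/` path, the function names and the "view" label are assumptions for illustration: the alt text describes the product and the view, and the file name is a hyphenated, ASCII-only version of the same text.

```python
import re
import unicodedata
from html import escape


def slugify(text: str) -> str:
    """Turn text into a lowercase, hyphen-separated ASCII file name stem."""
    # Decompose accented characters and drop the accents (é -> e).
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with one hyphen.
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")


def image_tag(brand: str, model: str, product_type: str, view: str, ext: str = "jpg") -> str:
    """Build an <img> tag whose file name and alt text describe the product."""
    alt = f"{brand} {model} {product_type}, {view}"
    filename = f"{slugify(alt)}.{ext}"
    return f'<img src="/images/{filename}" alt="{escape(alt, quote=True)}">'
```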

The most important keywords should also appear in the description text. But be careful, less is often more here: so-called keyword stuffing goes down badly with readers and search engines alike.
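Whether a text leans toward keyword stuffing can be roughly checked by measuring keyword density, i.e. the share of words that belong to occurrences of the keyword. The following Python sketch is a heuristic under stated assumptions: it counts only exact, case-insensitive word sequences, and the 3% threshold in `looks_stuffed` is an arbitrary illustrative value, not a limit published by Google.

```python
import re
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def keyword_density(text: str, keyword: str) -> float:
    """Share of words in `text` that belong to occurrences of `keyword`."""
    tokens = tokenize(text)
    phrase = tokenize(keyword)
    if not tokens or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the keyword phrase appears word for word.
    hits = sum(1 for i in range(len(tokens) - n + 1) if tokens[i:i + n] == phrase)
    return hits * n / len(tokens)


def looks_stuffed(text: str, keyword: str, threshold: float = 0.03) -> bool:
    # Hypothetical threshold chosen for illustration only.
    return keyword_density(text, keyword) > threshold
```

A high score is only a hint to reread the text; what matters is that the description reads naturally for customers.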

Usability

Another aspect that must not be neglected is usability, meaning the factors that affect the customer's shopping experience. Naturally, every shop owner wants to make buying as pleasant as possible for customers. A clear shop design, a sensible organization of products into categories and an attractive appearance are the basic requirements for a pleasant shopping experience. Loading times are another important point that is still often given too little importance. The longer a page takes to load completely, the higher the probability that the user loses patience and leaves. On the Internet, the competition is always just a click away.

Anyone who manages to guide users through the various steps of the ordering process so that they feel comfortable and well advised (which is far more difficult online than in a physical store) is on the right track. A common approach, for example, is to display other products from the shop directly on the product page that complement the item currently being viewed, or that other customers have bought together with it. In this way you can offer the customer some advice despite the limited possibilities of the Internet, and perhaps even prompt them to buy more than they originally intended. It goes without saying that such offers should not be too pushy, so as not to annoy customers.
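The "customers also bought" suggestion can be derived from order history by counting how often other products appear in the same orders as the viewed item. This Python sketch assumes a hypothetical list of past orders, each a set of product IDs; it is a simple co-occurrence count, not a full recommendation engine.

```python
from collections import Counter
from typing import Iterable, List, Set


def bought_together(orders: Iterable[Set[str]], product: str, limit: int = 3) -> List[str]:
    """Products most often ordered together with `product`, most frequent first."""
    counts = Counter()
    for order in orders:
        if product in order:
            # Count every other item that shared a basket with `product`.
            counts.update(item for item in order if item != product)
    return [item for item, _ in counts.most_common(limit)]
```

Keeping `limit` small is one way to make sure such suggestions stay helpful rather than pushy.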

An online agency can also advise you on what else makes for good usability.

Conclusion

In many respects, search engine optimization for online shops is more demanding and difficult than conventional SEO for company websites or blogs. It is therefore advisable to hire a professional to optimize your own shop: half-knowledge is not enough to achieve results, and at worst it can even cause harm. Hiring an experienced web agency is therefore a worthwhile investment that can yield a high return.